Essential Routing & Protection Mechanisms for Scalable Microservices

- Author: Pulathisi Kariyawasam

Introduction

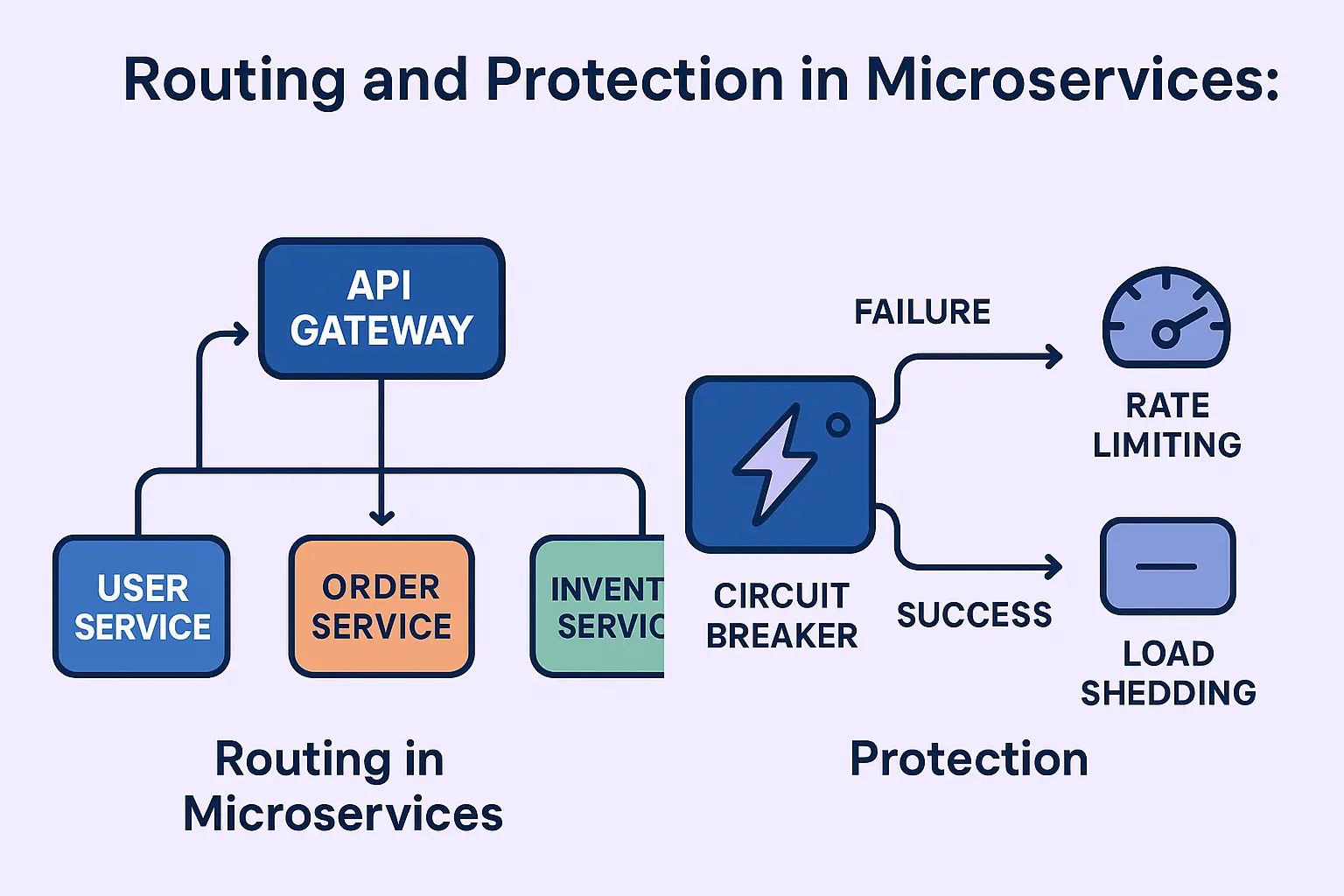

In a microservices architecture, routing and protection mechanisms keep communication between services reliable and performance stable.

Understanding how routing directs traffic and how protection prevents cascading failures helps you design systems that are both scalable and resilient.

Routing in Microservices

Routing ensures each incoming request reaches the correct microservice.

It can depend on URL paths, domains, or dynamic discovery systems.

Here are the most common routing patterns with examples:

1. URL-Based Routing (Path-Based)

Description:

Routes requests based on the URL path.

Example:

- /users/45 → User Service

- /orders/123 → Order Service

Benefit:

Simple, intuitive, and easy to implement with tools like Nginx or an API gateway.
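
As a rough illustration of path-based routing, here is a minimal Python sketch. The route table, the service names, and the `route_by_path` function are hypothetical and stand in for what a real proxy such as Nginx would be configured to do.

```python
from typing import Optional

# Hypothetical route table: path prefix -> service name.
ROUTES = {
    "/users": "user-service",
    "/orders": "order-service",
}

def route_by_path(path: str) -> Optional[str]:
    """Return the service for the longest matching path prefix, or None."""
    best = None
    for prefix, service in ROUTES.items():
        # Match "/users" and "/users/45", but not "/usersettings".
        if path == prefix or path.startswith(prefix + "/"):
            if best is None or len(prefix) > len(best[0]):
                best = (prefix, service)
    return best[1] if best else None
```

Matching on the longest prefix means a more specific route can be added later without reordering the table.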

2. Host-Based Routing

Description:

Uses the domain or subdomain to decide the destination.

Example:

- api.users.example.com → User Service

- api.orders.example.com → Order Service

Benefit:

Ideal when services are separated by domain for security or organizational ownership.
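
Host-based routing can be sketched the same way, keyed on the `Host` header instead of the path. The table and the `route_by_host` helper below are illustrative assumptions, not part of any particular proxy.

```python
from typing import Optional

# Hypothetical host table: domain -> service name.
HOST_ROUTES = {
    "api.users.example.com": "user-service",
    "api.orders.example.com": "order-service",
}

def route_by_host(host_header: str) -> Optional[str]:
    """Look up the target service from an HTTP Host header value."""
    # Host names are case-insensitive and may carry a ":port" suffix.
    # This simple split does not handle IPv6 literals such as "[::1]:8080".
    host = host_header.strip().lower().split(":")[0]
    return HOST_ROUTES.get(host)
```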

3. API Gateway Routing

Description:

A single entry point that manages all requests, routing them to appropriate microservices.

It also handles features like authentication, caching, and request transformation.

Example:

- /products → Product Service

- /cart → Cart Service

Benefit:

Centralizes control and simplifies the client experience.

Popular Tools: Kong, Nginx, AWS API Gateway, Traefik.
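
To make the "single entry point" idea concrete, the following sketch shows a toy gateway that authenticates a request and then forwards it to a backend. Everything here is hypothetical (the token set, the handler functions, the tuple-based response); real gateways such as Kong or AWS API Gateway express this through configuration rather than application code.

```python
from typing import Callable, Dict, Tuple

Response = Tuple[int, str]          # (HTTP status, body)
Handler = Callable[[str], Response]

def product_service(path: str) -> Response:
    return 200, f"product-service handled {path}"

def cart_service(path: str) -> Response:
    return 200, f"cart-service handled {path}"

# Hypothetical backends and credentials, for illustration only.
BACKENDS: Dict[str, Handler] = {"/products": product_service, "/cart": cart_service}
VALID_TOKENS = {"demo-token"}

def gateway(path: str, token: str) -> Response:
    """Authenticate once at the edge, then route to the matching backend."""
    if token not in VALID_TOKENS:
        return 401, "unauthorized"
    for prefix, handler in BACKENDS.items():
        if path == prefix or path.startswith(prefix + "/"):
            return handler(path)
    return 404, "no route"
```

Because the token check happens before routing, the backends in this sketch never see unauthenticated traffic.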

4. Service Discovery (Dynamic Routing)

Description:

Services register themselves dynamically.

Routers or clients discover available instances in real time.

Example:

When a new Order Service instance starts, it registers in Consul or Eureka, and routers automatically send requests there.

Benefit:

Improves scalability and resilience, adapting as services appear or go offline.
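
The registration and lookup flow can be approximated with an in-memory registry. This is only a sketch: `ServiceRegistry` and its methods are invented for illustration and do not mirror the Consul or Eureka APIs, which also add health checks and replication.

```python
from collections import defaultdict
from typing import Dict, List

class ServiceRegistry:
    """Toy in-memory registry with round-robin instance selection."""

    def __init__(self) -> None:
        self._instances: Dict[str, List[str]] = defaultdict(list)
        self._counters: Dict[str, int] = defaultdict(int)

    def register(self, service: str, address: str) -> None:
        # Called by an instance when it starts.
        if address not in self._instances[service]:
            self._instances[service].append(address)

    def deregister(self, service: str, address: str) -> None:
        # Called when an instance shuts down or fails a health check.
        if address in self._instances[service]:
            self._instances[service].remove(address)

    def pick(self, service: str) -> str:
        """Return the next live instance, rotating through the list."""
        instances = self._instances[service]
        if not instances:
            raise LookupError(f"no live instances of {service}")
        index = self._counters[service] % len(instances)
        self._counters[service] += 1
        return instances[index]
```

A router would call `pick("order-service")` on each request, so a newly registered instance starts receiving traffic on the next rotation.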

Protection in Microservices

Protection patterns prevent failures from spreading across the system and keep services healthy under heavy load.

Let’s break down some key protection mechanisms:

Circuit Breaker vs Rate Limiting vs Load Shedding

| Pattern | What It Does | Example Scenario | Purpose |
|---|---|---|---|
| Circuit Breaker | Temporarily stops requests to a failing service | If Payment Service keeps timing out, block calls for a few seconds | Prevent cascading failures |
| Rate Limiting | Limits how many requests clients can send | Each user can make max 100 requests per minute | Protect backend and ensure fair usage |
| Load Shedding | Drops low-priority requests when system is overloaded | Reject non-critical API calls under high CPU load | Maintain system availability during spikes |
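
The circuit breaker row is the least obvious of the three, so here is a minimal sketch of one. The class name, the failure threshold, and the reset timeout are assumptions chosen for illustration; production libraries typically add sliding windows and metrics on top of this.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is skipped."""

class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, func: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; call skipped")
            # Timeout elapsed: half-open, so let one trial call through.
        try:
            result = func()
        except Exception:
            self._failures += 1
            # Open on reaching the threshold, or re-open if the trial call failed.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

While the circuit is open, callers fail immediately instead of waiting on a service that keeps timing out, which is what stops the failure from cascading.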

More on Rate Limiting

Purpose:

Prevent a single client or service from overwhelming your system.

Example:

An API allows 500 requests per hour per IP.

Anything beyond that returns HTTP 429 – Too Many Requests.

Implementation Tools:

Nginx, API Gateway, Redis-based limiters, or service-level throttling.
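
A fixed-window counter is one simple way to enforce a limit like the one above. The sketch below keeps counts in a local dictionary; the class name and its parameters are hypothetical, and a multi-instance deployment would more likely keep the counters in a shared store such as Redis.

```python
import time
from typing import Callable, Dict, Tuple

class FixedWindowLimiter:
    """Allow at most `limit` requests per client in each fixed time window."""

    def __init__(self, limit: int = 500, window_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self._clock = clock
        # client IP -> (window index, requests seen in that window)
        self._windows: Dict[str, Tuple[int, int]] = {}

    def check(self, client_ip: str) -> int:
        """Return the HTTP status to send: 200 if allowed, 429 if over the limit."""
        window = int(self._clock() // self.window_seconds)
        current, count = self._windows.get(client_ip, (window, 0))
        if current != window:
            count = 0  # a new window has started, so reset the counter
        if count >= self.limit:
            self._windows[client_ip] = (window, count)
            return 429
        self._windows[client_ip] = (window, count + 1)
        return 200
```

Fixed windows are easy to reason about but allow a burst of up to twice the limit across a window boundary; sliding-window or token-bucket limiters smooth that out at the cost of more bookkeeping.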

More on Load Shedding

Purpose:

Actively shed less important traffic during overload, ensuring critical features stay online.

Example:

An e-commerce site may drop search queries if order processing is under stress.

Tip:

Combine load shedding with monitoring tools like Prometheus or Grafana to detect overload conditions in real time.
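
A load-shedding decision can be as small as a function of request priority and current load. The priority levels and the 0.80 and 0.95 thresholds below are made-up values for illustration; in practice they would be tuned against real overload signals.

```python
from enum import IntEnum

class Priority(IntEnum):
    CRITICAL = 0   # e.g. order processing
    NORMAL = 1
    LOW = 2        # e.g. search queries

def should_shed(priority: Priority, load: float) -> bool:
    """Decide whether to reject a request, given load as a fraction in [0, 1]."""
    if load >= 0.95:
        # Severe overload: keep only critical traffic.
        return priority > Priority.CRITICAL
    if load >= 0.80:
        # Moderate overload: drop low-priority traffic first.
        return priority >= Priority.LOW
    return False
```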

Timeouts and Retries

Description:

Timeouts set a limit on how long to wait for responses.

Retries reattempt failed requests automatically.

Example:

Try the Inventory Service call up to 3 times, waiting at most 2 seconds for each attempt.

Note:

Too many retries can amplify load on a service that is already struggling, so cap the number of attempts and use a backoff strategy.
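
Here is a minimal sketch of bounded retries with a per-attempt timeout. It assumes the example means 3 attempts in total, and that the wrapped call accepts a timeout in seconds and raises `TimeoutError` when it expires; `call_with_retries` is a hypothetical helper. Delays between attempts are left out to keep the sketch short.

```python
from typing import Callable, Optional, TypeVar

T = TypeVar("T")

def call_with_retries(call: Callable[[float], T], attempts: int = 3,
                      timeout_s: float = 2.0) -> T:
    """Invoke call(timeout_s) up to `attempts` times, retrying only on TimeoutError."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[TimeoutError] = None
    for _ in range(attempts):
        try:
            return call(timeout_s)
        except TimeoutError as exc:
            last_error = exc  # remember the failure and try again
    assert last_error is not None
    raise last_error
```

Retrying only on timeouts is a deliberate choice here: errors such as validation failures will not succeed on a second attempt, so repeating them only adds load.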

Exponential Backoff and Jitter

Description:

Increase the delay between retries exponentially and add a random offset so that clients do not all retry at the same moment.

Example:

Wait 1s → 2s → 4s, adding a random 0–500ms delay to each wait.

Benefit:

Prevents multiple services from retrying at the same time after failure recovery.
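
The schedule in the example can be computed as follows. The function name and its parameters are assumptions; the defaults simply reproduce the 1s, 2s, 4s base delays with up to 500ms of jitter.

```python
import random
from typing import List, Optional

def backoff_delays(retries: int, base_s: float = 1.0, max_jitter_s: float = 0.5,
                   rng: Optional[random.Random] = None) -> List[float]:
    """Return one delay per retry: base * 2**attempt plus uniform random jitter."""
    rng = rng or random.Random()
    return [
        base_s * (2 ** attempt) + rng.uniform(0.0, max_jitter_s)
        for attempt in range(retries)
    ]
```

A caller would sleep for each returned delay before the corresponding retry. Passing a seeded `random.Random` makes the schedule reproducible in tests.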

Bulkhead Pattern

Description:

Isolate resources like threads or database connections for each service or function.

Example:

Limit threads accessing Payment Service so Order Service remains unaffected even if Payment slows down.

Benefit:

Improves fault isolation and prevents resource starvation.
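
One lightweight way to build a bulkhead is a semaphore that caps concurrent calls to a single dependency. The `Bulkhead` class below is a hypothetical sketch that rejects callers when the compartment is full rather than queueing them.

```python
import threading
from typing import Callable, TypeVar

T = TypeVar("T")

class BulkheadFullError(Exception):
    """Raised when every slot in the bulkhead is already in use."""

class Bulkhead:
    def __init__(self, max_concurrent: int) -> None:
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def run(self, func: Callable[[], T]) -> T:
        # Non-blocking acquire: fail fast instead of tying up another thread.
        if not self._slots.acquire(blocking=False):
            raise BulkheadFullError("no free slot in this bulkhead")
        try:
            return func()
        finally:
            self._slots.release()
```

Giving calls to Payment Service their own `Bulkhead` instance means a slowdown there can occupy at most `max_concurrent` threads, leaving the rest free for other work.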

Hedging (Request Replication)

Description:

Send multiple identical requests to different instances and use the quickest response.

Example:

If one instance is slow, a faster one replies first and the client uses that response, which lowers tail latency.

Tradeoff:

Consumes extra network and compute resources, so reserve it for idempotent, latency-sensitive requests.
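
With `asyncio`, hedging can be sketched as racing several coroutines and cancelling the losers. `hedged_call` is a hypothetical helper, and the replicas are assumed to be zero-argument async callables that each perform the same idempotent request against a different instance.

```python
import asyncio
from typing import Awaitable, Callable, Sequence, TypeVar

T = TypeVar("T")

async def hedged_call(replicas: Sequence[Callable[[], Awaitable[T]]]) -> T:
    """Start the same request on every replica and return the first to finish."""
    tasks = [asyncio.ensure_future(replica()) for replica in replicas]
    try:
        done, _ = await asyncio.wait(tasks, return_when=asyncio.FIRST_COMPLETED)
        # If the first finisher failed, its exception propagates to the caller.
        return next(iter(done)).result()
    finally:
        # Cancel the slower requests so they stop consuming resources.
        for task in tasks:
            task.cancel()
```

This version fires all requests at once. A common refinement is to send the second request only after the first has been outstanding for some delay, which keeps the extra load small.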

Final Thoughts

Combining these routing and protection patterns helps build a resilient, scalable microservices ecosystem.

- Routing ensures requests find the right path.

- Protection keeps the system stable under pressure.

Together, they form the foundation of modern distributed systems.